How to Get the Client’s Real IP in a Kubernetes Pod

Kubernetes relies on the kube-proxy component for Service communication and load balancing. Along the way, SNAT may rewrite the source address, so the application in the Pod no longer sees the client’s real IP address. This article explains how to obtain the client’s real IP address inside a Kubernetes cluster.

Create a backend service

Service selection

The containous/whoami image is used here as the backend service. According to its Docker Hub page, a request to port 80 returns information about the client. In application code, the same information can be read from the HTTP request, that is, from its headers and remote address.

Hostname : 6e0030e67d6a

IP : 127.0.0.1

IP : ::1

IP : 172.17.0.27

IP : fe80::42:acff:fe11:1b

GET / HTTP/1.1

Host: 0.0.0.0:32769

User-Agent: curl/7.35.0

Accept: */*
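To make it concrete where this information comes from, here is a minimal Python sketch of a whoami-style endpoint. It is not the actual whoami implementation; the names WhoAmIHandler and fetch_once are hypothetical, and the server only listens on a free local port for demonstration.

```python
import http.client
import http.server
import threading


class WhoAmIHandler(http.server.BaseHTTPRequestHandler):
    """Echo the peer address and request headers, loosely like whoami."""

    def do_GET(self):
        # client_address is the TCP peer as seen by this process. Behind
        # SNAT it is the translated address, not the original client.
        host, port = self.client_address[:2]
        lines = [
            f"RemoteAddr: {host}:{port}",
            f"{self.command} {self.path} {self.request_version}",
        ]
        lines += [f"{name}: {value}" for name, value in self.headers.items()]
        body = ("\n".join(lines) + "\n").encode()
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, fmt, *args):
        pass  # keep the demo quiet


def fetch_once() -> str:
    """Start the server on a free local port, request "/" once, return the body."""
    server = http.server.HTTPServer(("127.0.0.1", 0), WhoAmIHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        conn = http.client.HTTPConnection("127.0.0.1", server.server_address[1], timeout=5)
        conn.request("GET", "/")
        body = conn.getresponse().read().decode()
        conn.close()
        return body
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    print(fetch_once())
```

The point of the sketch is that RemoteAddr is simply the peer address of the TCP connection. Whatever rewrites that address on the way to the Pod also changes what the application sees.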

Cluster environment

Here is a brief overview of the cluster. It has three nodes: one master and two workers, as shown below:

Create service

- Create a workspace and a project

As shown in the figure below, the workspace and the project are both named realip.

- Create service

Create a stateless service here, select the containous/whoami image, and use the default port.

- Change the service to NodePort mode

Edit the service’s external access settings and change the access method to NodePort.

Check the NodePort assigned to the service; here it is 31509.

- Access service

Opening the master node’s EIP on port 31509 (EIP:31509) in a browser returns the following:

Hostname: myservice-fc55d766-9ttxt

IP: 127.0.0.1

IP: 10.233.70.42

RemoteAddr: 192.168.13.4:21708

GET / HTTP/1.1

Host: dev.chenshaowen.com:31509

User-Agent: Chrome/86.0.4240.198 Safari/537.36

Accept: text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9

Accept-Encoding: gzip, deflate

Accept-Language: zh-CN,zh;q=0.9,en;q=0.8

Cookie: lang=zh;

Dnt: 1

Upgrade-Insecure-Requests: 1

RemoteAddr is the IP of the master node, not the client’s real IP address. Host is the address used to reach the service; a domain name is used here for convenience, which does not affect the test results.

Get real IP directly through NodePort access

In the request above, the client’s real IP is lost because SNAT rewrites the source IP of traffic sent to the Service. Setting the service’s externalTrafficPolicy to Local solves this problem.

Open the configuration editing page of the service

Set the service’s externalTrafficPolicy to Local mode.
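Outside the console UI, the same change can be expressed as a patch to the Service object. The sketch below only builds a kubectl patch command line and does not run it. The service name myservice is inferred from the Pod hostname in the output above, and using realip as the namespace assumes the project maps to a namespace of the same name; the helper local_policy_patch_command is hypothetical.

```python
import json
import shlex


def local_policy_patch_command(service: str, namespace: str) -> str:
    """Return a kubectl command that sets externalTrafficPolicy to Local."""
    patch = {"spec": {"externalTrafficPolicy": "Local"}}
    args = [
        "kubectl", "patch", "service", service,
        "--namespace", namespace,
        "--type", "merge",
        "--patch", json.dumps(patch),
    ]
    # shlex.join quotes the JSON so the line can be pasted into a shell.
    return shlex.join(args)


if __name__ == "__main__":
    # Assumed names: "myservice" from the Pod hostname, "realip" from the project.
    print(local_policy_patch_command("myservice", "realip"))
```

Passing "Cluster" in the patch instead would restore the default behavior.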

Access the service again; it now returns the following:

Hostname: myservice-fc55d766-9ttxt

IP: 127.0.0.1

IP: 10.233.70.42

RemoteAddr: 139.198.254.11:51326

GET / HTTP/1.1

Host: dev.chenshaowen.com:31509

User-Agent: Chrome/86.0.4240.198 Safari/537.36

Accept: text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9

Accept-Encoding: gzip, deflate

Accept-Language: zh-CN,zh;q=0.9,en;q=0.8

Cache-Control: max-age=0

Connection: keep-alive

Cookie: lang=zh;

Dnt: 1

Upgrade-Insecure-Requests: 1
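The two responses differ mainly in RemoteAddr. As a rough way to compare them, the sketch below extracts RemoteAddr from whoami-style output and checks whether it is a private address. This is only a heuristic: it assumes, as in this test, that nodes use private addresses and the client arrives from a public one. The function names are hypothetical.

```python
import ipaddress


def remote_ip(whoami_output: str) -> str:
    """Extract the IP part of the RemoteAddr line from whoami-style output."""
    for line in whoami_output.splitlines():
        if line.startswith("RemoteAddr:"):
            addr = line.split(":", 1)[1].strip()
            # Drop the trailing ":port"; rpartition keeps IPv6 colons intact.
            host = addr.rpartition(":")[0]
            return host.strip("[]")
    raise ValueError("no RemoteAddr line found")


def looks_like_node_address(ip: str) -> bool:
    """Heuristic: a private address suggests the source IP was rewritten."""
    return ipaddress.ip_address(ip).is_private


if __name__ == "__main__":
    before = "Hostname: myservice-fc55d766-9ttxt\nRemoteAddr: 192.168.13.4:21708\n"
    after = "Hostname: myservice-fc55d766-9ttxt\nRemoteAddr: 139.198.254.11:51326\n"
    for label, text in (("Cluster", before), ("Local", after)):
        ip = remote_ip(text)
        print(label, ip, "private" if looks_like_node_address(ip) else "public")
```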

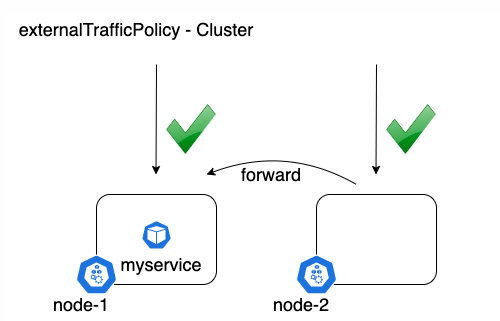

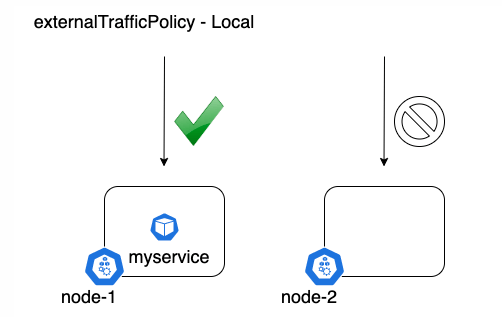

externalTrafficPolicy accepts two values. Cluster, the default, hides the client source IP and may cause a second hop to another node, but gives good overall load distribution. Local preserves the client source IP and avoids the second hop for LoadBalancer and NodePort services, but carries a risk of unevenly distributed traffic.

The following is a comparison diagram:

In Local mode, a request that lands on a node without a service Pod cannot be served. With curl, the connection stalls after “TCP_NODELAY set” and then times out:

* Trying 139.198.112.248...

* TCP_NODELAY set

* Connection failed

* connect to 139.198.112.248 port 31509 failed: Operation timed out

* Failed to connect to 139.198.112.248 port 31509: Operation timed out

* Closing connection 0

Get real IP through LB -> Service access

In a production environment, several nodes usually receive client traffic at the same time. With Local mode alone, the service becomes less reachable, because nodes without a service Pod cannot serve the traffic they receive. Putting an LB in front addresses this: its health probes ensure that traffic is forwarded only to nodes that run a service Pod. QingCloud’s LB is used here as an example. In the QingCloud console, create an LB and add a listener on port 31509. As the figure below shows, only the master node is active on port 31509 of the service, so traffic is directed only to the master node, as expected.
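The probing idea can be sketched in a few lines: try a TCP connection to the NodePort on every node and keep only the nodes that answer. This is a simplified illustration, not how any particular LB product implements its health checks. The node addresses are placeholders from the documentation range, and the function names are hypothetical.

```python
import socket


def accepts_tcp(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections and timeouts alike.
        return False


def healthy_backends(nodes, port, timeout=2.0):
    """Keep only the nodes whose port passes the TCP check."""
    return [node for node in nodes if accepts_tcp(node, port, timeout)]


if __name__ == "__main__":
    # Placeholder node addresses, not the cluster used in this article.
    nodes = ["192.0.2.10", "192.0.2.11", "192.0.2.12"]
    print(healthy_backends(nodes, 31509, timeout=1.0))
```

In Local mode, a node without a service Pod fails this check in the same way the curl request above timed out, so it drops out of the backend list.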
